Developers with no data science experience can now integrate machine learning (ML) with IoT. As IoT endpoints proliferate, the need for organizations to understand how to architect ML with IoT will grow rapidly. For this to happen, however, IoT architects and data scientists must overcome the challenge of getting two very different disciplines to collaborate closely on the design of an ML-powered IoT system.

IoT architects often focus on IoT infrastructure (e.g., IoT endpoints, gateways and platforms) and defer consideration of how they will integrate ML inference into their design. They may not be familiar enough with ML to know when it could help them solve their business problems, so they miss opportunities to use it where it would be useful. They also lack sufficient knowledge of data science technology and terminology to understand how to deal with the challenge of ML integration.

Data scientists often focus on building ML models (e.g., data preparation, training and algorithms) and neglect consideration of how the models should be integrated with operational systems. They often lack sufficient knowledge of IoT technology and design to understand how the integration of ML will impact IoT architecture.

Role of the Machine Learning Inference Server

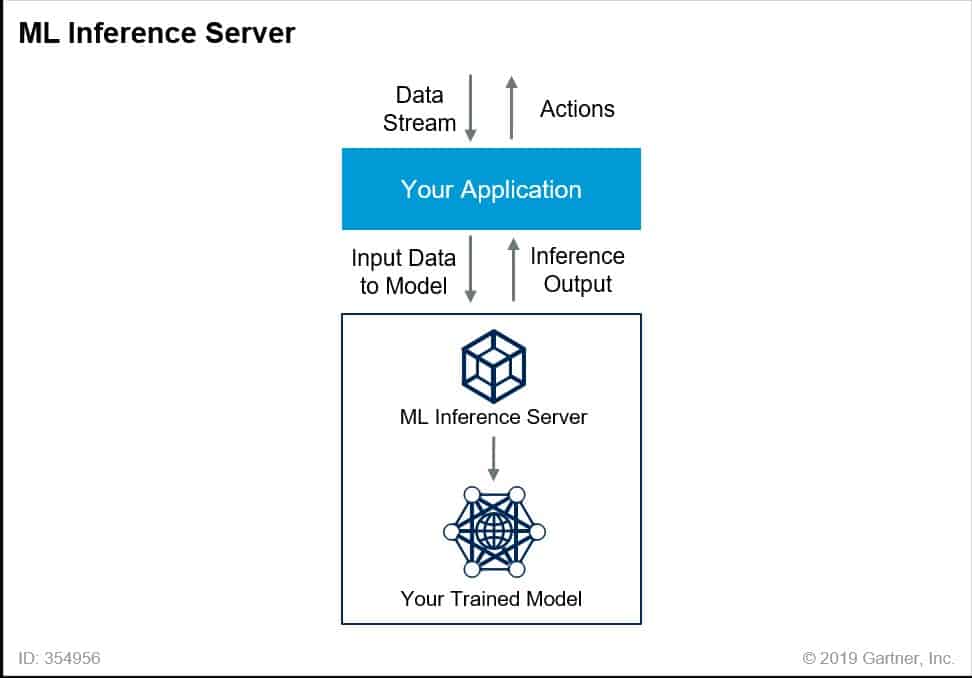

An important development in machine learning is the emergence of ML inference servers (also known as inference engines). The ML inference server executes the model algorithm and returns the inference output (see Figure).

Source: Gartner

The ML inference server accepts input data from IoT devices, passes the data to a trained ML model, executes the model and returns the inference output.
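The request flow described above can be sketched in a few lines of Python. This is only an illustration of the three steps (accept input, execute the model, return output), not the API of any real inference server. The `InferenceServer` class, the `overheating_model` function, the device name and the 0.5 alert threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class InferenceServer:
    """Toy stand-in for an ML inference server (hypothetical, not a product API)."""

    # The trained model, assumed to have been loaded elsewhere.
    model: Callable[[List[float]], float]

    def infer(self, device_id: str, readings: List[float]) -> Dict[str, object]:
        # 1. Accept input data from an IoT device.
        if not readings:
            raise ValueError("no sensor readings supplied")
        # 2. Pass the data to the trained model and execute it.
        score = self.model(readings)
        # 3. Return the inference output to the caller.
        #    The 0.5 alert threshold is an assumption for illustration.
        return {"device_id": device_id, "score": score, "alert": score > 0.5}


def overheating_model(readings: List[float]) -> float:
    """Hypothetical 'trained' model: fraction of readings above 80 degrees."""
    return sum(1 for r in readings if r > 80.0) / len(readings)


server = InferenceServer(model=overheating_model)
result = server.infer("pump-7", [72.0, 85.5, 91.2, 88.0])
print(result)
```

In a real deployment the model would be a file loaded by the server rather than a Python function, and the call would typically arrive over a network protocol, but the division of responsibilities is the same.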

The ML inference server requires that your ML model creation tools export the model in a specific file format that the server understands. For instance, the Apple Core ML inference server can only understand models that are stored in the .mlmodel file format. Suppose you plan to deploy a model to the Apple Core ML inference server, but your data science team used TensorFlow to create the model. In that case, you will need to use the TensorFlow conversion tool to convert the model to the .mlmodel file format before you can deploy it.
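A simple way to catch this kind of mismatch early is to check a model file against the formats a target server accepts before attempting deployment. The sketch below is a hypothetical helper, not part of any vendor toolkit. Only the Core ML entry comes from the text above; the `ACCEPTED_FORMATS` table, the function name and the example file names are assumptions for illustration.

```python
from pathlib import Path
from typing import Dict, Set

# Formats each target server accepts. Only the Core ML entry is taken from
# the article; extend this table from your own servers' documentation.
ACCEPTED_FORMATS: Dict[str, Set[str]] = {
    "coreml": {".mlmodel"},
}


def needs_conversion(model_file: str, server: str) -> bool:
    """Return True if the model file must be converted before deployment."""
    if server not in ACCEPTED_FORMATS:
        raise KeyError(f"unknown inference server: {server}")
    suffix = Path(model_file).suffix.lower()
    return suffix not in ACCEPTED_FORMATS[server]


# A model exported by a training tool in a different format (the file name
# and .pb extension are illustrative) would need converting first.
print(needs_conversion("anomaly_detector.pb", "coreml"))
print(needs_conversion("anomaly_detector.mlmodel", "coreml"))
```

A check like this does not perform the conversion itself. It only flags, as part of a build or release step, that the data science team's export and the IoT team's target server do not yet agree on a format.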

The Open Neural Network Exchange Format (ONNX) will help to improve file format interoperability between ML inference servers and model training environments. ONNX is an open format to represent deep-learning models. There will be greater portability of models between tools and ML inference servers as vendors increasingly support ONNX.

New Gartner Research

New research from Gartner helps technical professionals overcome the challenge of integrating ML with IoT. It analyses four reference architectures and ML inference server technologies. IoT architects and data scientists can use this research to improve cross-domain collaboration, analyse ML integration trade-offs and accelerate system design. Each reference architecture can be used as the basis of a high-level design or can be combined to form a hybrid design.

First published on Gartner Blog Network